Function-space Diffusion Posterior Sampling: Guided Diffusion Sampling on Function Spaces with Applications to PDEs

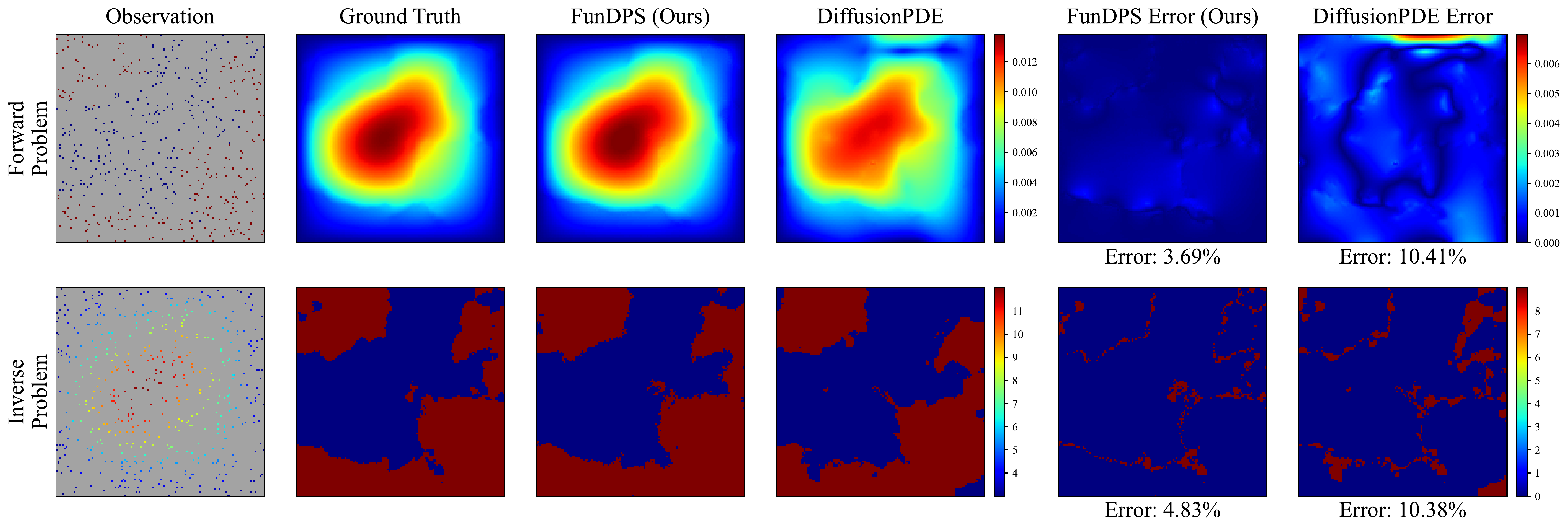

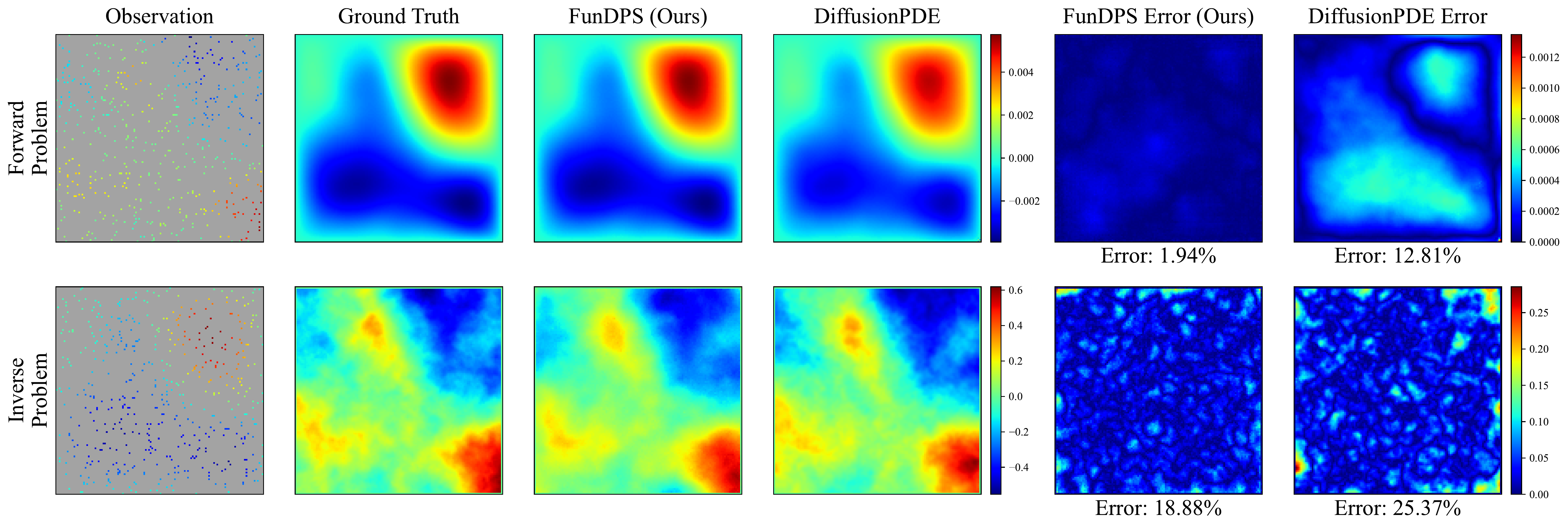

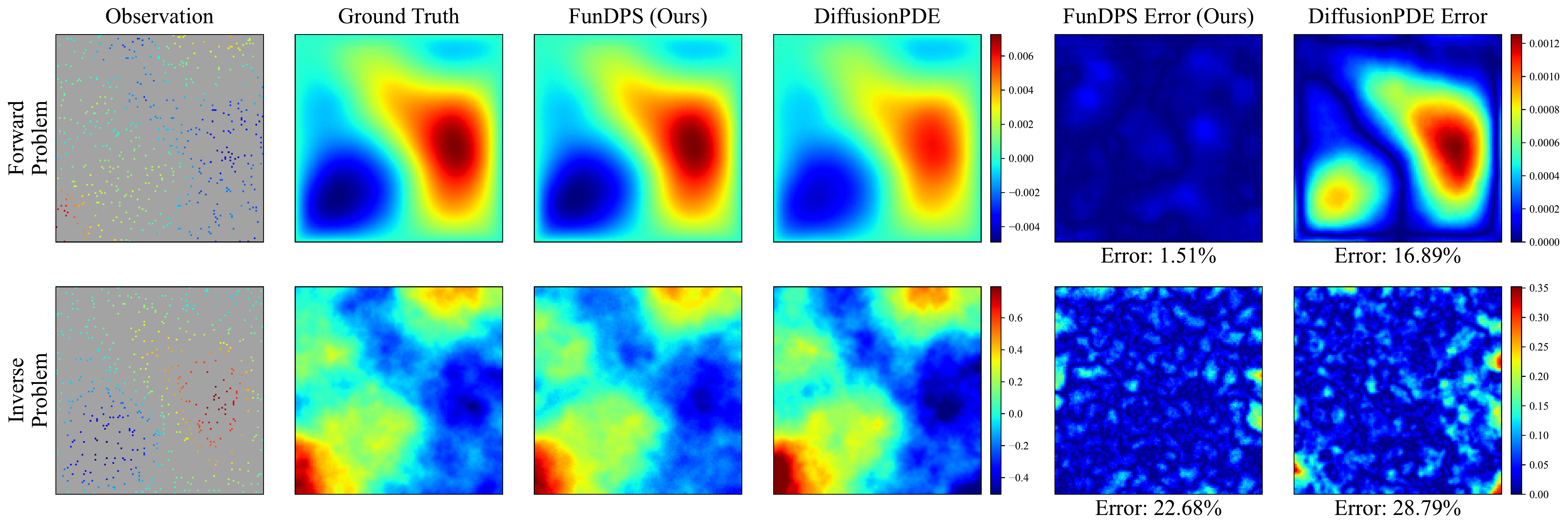

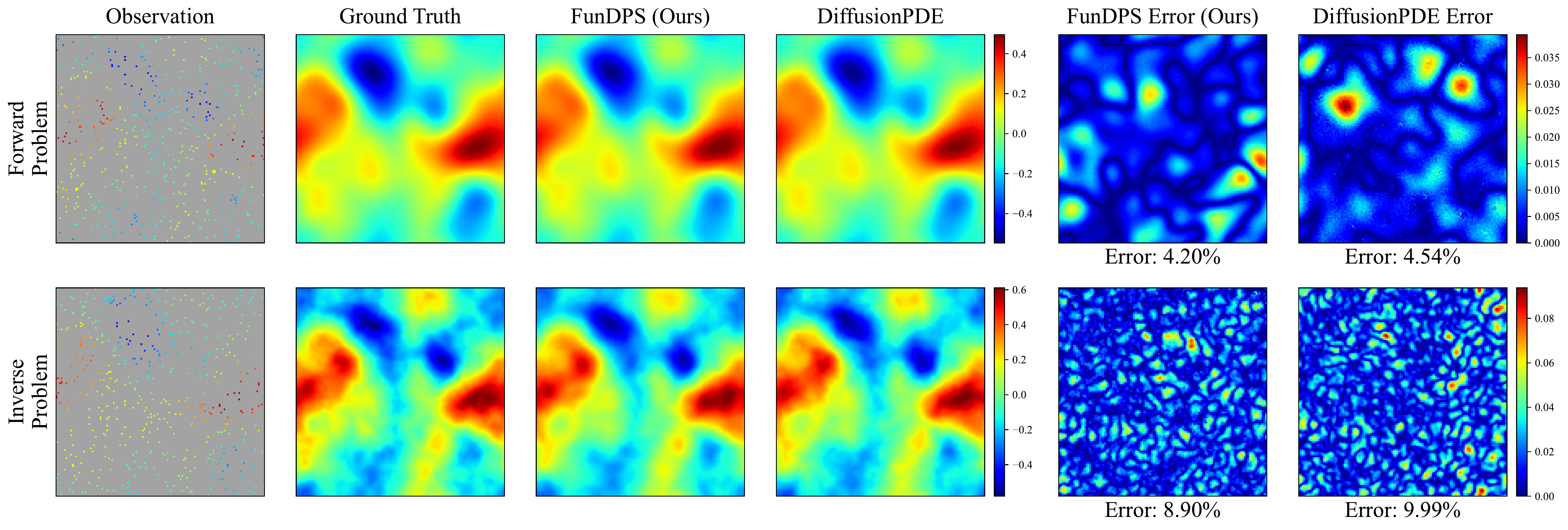

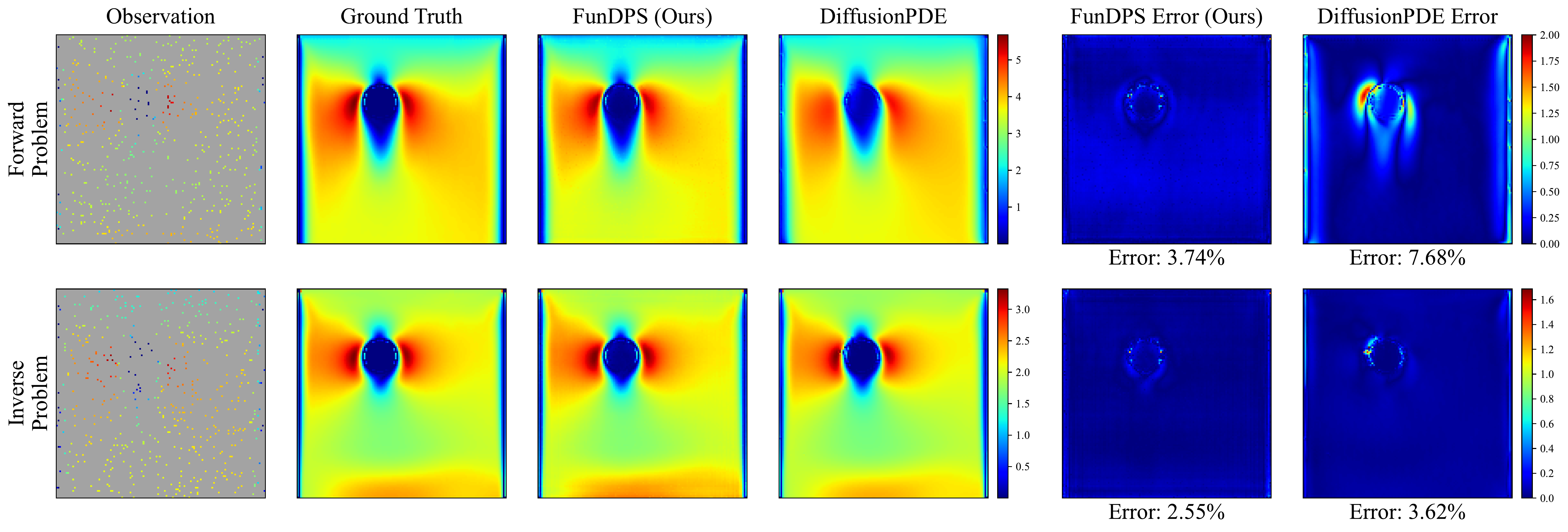

Comparison between our method (FunDPS) and the state-of-the-art diffusion baseline, DiffusionPDE, on the Darcy Flow and Helmholtz problems. The left column shows the sparse observation measurements (3% of total points), while the other two columns display the absolute reconstruction error of our method and DiffusionPDE, respectively. FunDPS achieves superior accuracy with an order of magnitude fewer sampling steps.

Abstract

We propose a general framework for conditional sampling in PDE-based inverse problems, targeting the recovery of whole solutions from extremely sparse or noisy measurements. This is accomplished by a function-space diffusion model and plug-and-play guidance for conditioning. Our method first trains an unconditional discretization-agnostic denoising model using neural operator architectures. At inference, we refine the samples to satisfy sparse observation data via a gradient-based guidance mechanism.
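The guidance mechanism can be illustrated with a single guided update in the spirit of diffusion posterior sampling (DPS). This is a minimal, hypothetical sketch, not the FunDPS implementation. It assumes a toy linear denoiser (the exact posterior mean for an i.i.d. Gaussian prior with variance `s2`), a binary observation `mask` covering about 3% of grid points, and a guidance weight `zeta`; all function and parameter names are illustrative. Because the toy denoiser is linear, the gradient of the measurement loss with respect to the noisy sample is available in closed form, so no automatic differentiation is needed.

```python
import numpy as np

def denoise(x_t, sigma, s2=1.0):
    """Toy denoiser (assumption): E[x_0 | x_t] for x_0 ~ N(0, s2 I), x_t = x_0 + sigma * noise."""
    return x_t * s2 / (s2 + sigma**2)

def guidance_grad(x_t, sigma, y, mask, s2=1.0):
    """Gradient of ||mask * (denoise(x_t) - y)||^2 with respect to x_t.

    The toy denoiser is c * x_t with c = s2 / (s2 + sigma^2), so the chain rule
    gives 2 * c * mask * (mask * (c * x_t - y)); for a 0/1 mask, mask**2 == mask.
    """
    c = s2 / (s2 + sigma**2)
    residual = mask * (denoise(x_t, sigma, s2) - y)
    return 2.0 * c * residual

def guided_step(x_t, x_prev_unguided, sigma, y, mask, zeta=0.5, s2=1.0):
    """Pull an unguided reverse-step proposal toward the sparse observations."""
    return x_prev_unguided - zeta * guidance_grad(x_t, sigma, y, mask, s2)

# Usage: about 3% of a 64x64 field is observed; for illustration the unguided
# proposal is taken to be x_t itself.
rng = np.random.default_rng(0)
n = 64
truth = rng.standard_normal((n, n))
mask = (rng.random((n, n)) < 0.03).astype(float)
y = mask * truth
sigma = 0.5
x_t = truth + sigma * rng.standard_normal((n, n))

before = np.sum((mask * (denoise(x_t, sigma) - y)) ** 2)
x_new = guided_step(x_t, x_t, sigma, y, mask)
after = np.sum((mask * (denoise(x_new, sigma) - y)) ** 2)
```

The guided step only changes observed points, and it shrinks the measurement misfit of the denoised estimate there; unobserved points are left to the prior.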

Through rigorous mathematical analysis, we extend Tweedie's formula to infinite-dimensional Banach spaces, providing the theoretical foundation for our posterior sampling approach. Our method (FunDPS) accurately captures the posterior distribution in function spaces under minimal supervision and severe data scarcity.
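In its classical finite-dimensional form, Tweedie's formula states that if x_t = x_0 + σ ε with standard Gaussian ε, then E[x_0 | x_t] = x_t + σ² ∇ log p_t(x_t), where p_t is the marginal density of x_t. The sketch below checks this identity numerically for a scalar Gaussian prior, an assumed toy setting in which the score is known in closed form. It illustrates the finite-dimensional statement only, not the Banach-space extension.

```python
import numpy as np

# Toy setting (assumption): x0 ~ N(0, s2), x_t = x0 + sigma * eps.
s2, sigma = 2.0, 1.0
rng = np.random.default_rng(0)

def score(x_t):
    """Score of the marginal p_t = N(0, s2 + sigma^2)."""
    return -x_t / (s2 + sigma**2)

def tweedie_mean(x_t):
    """Tweedie's formula: E[x0 | x_t] = x_t + sigma^2 * grad log p_t(x_t)."""
    return x_t + sigma**2 * score(x_t)

# Monte Carlo check: average x0 over samples whose x_t lands near a query point.
x0 = rng.normal(0.0, np.sqrt(s2), size=2_000_000)
x_t = x0 + sigma * rng.standard_normal(x0.shape)
query = 1.5
near = np.abs(x_t - query) < 0.02
mc_estimate = x0[near].mean()
analytic = tweedie_mean(query)  # query * s2 / (s2 + sigma^2) = 1.0
```

The empirical conditional mean agrees with the score-based expression up to Monte Carlo error.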

Across five PDE tasks with only 3% of points observed, our method achieves an average 32% accuracy improvement over state-of-the-art fixed-resolution diffusion baselines while reducing sampling steps by 4x.

Furthermore, multi-resolution fine-tuning provides strong cross-resolution generalization and additional speedup. To the best of our knowledge, this is the first diffusion-based framework to operate independently of discretization, offering a practical and flexible solution for forward and inverse problems in the context of PDEs.

Method Overview

FunDPS consists of two main stages: training an unconditional function-space diffusion model, and guided posterior sampling for inverse problems.

The sampling and training pipelines of FunDPS. During inference, we use a standard reverse diffusion process with additional FunDPS guidance that steers the samples toward the posterior. During training, the model is based on a U-shaped neural operator, and Gaussian random fields are used as the noise sampler to ensure consistency within function spaces.
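Gaussian random field (GRF) noise can be drawn on any grid with a spectral method. The sketch below is a minimal illustration under stated assumptions: a periodic unit square, a covariance operator (−Δ + τ²I)^(−α) (a common choice in neural-operator work), and illustrative values `alpha=2.0`, `tau=3.0`. The exact covariance and parameters used by FunDPS are not stated here, and `sample_grf` is a hypothetical name.

```python
import numpy as np

def sample_grf(n, alpha=2.0, tau=3.0, rng=None):
    """Sample a periodic GRF on an n x n grid with covariance (-Lap + tau^2)^(-alpha).

    White noise is transformed to Fourier space, scaled by the square root of the
    covariance eigenvalues, and transformed back to physical space.
    """
    rng = np.random.default_rng() if rng is None else rng
    k = np.fft.fftfreq(n, d=1.0 / n)  # integer wavenumbers
    kx, ky = np.meshgrid(k, k, indexing="ij")
    # Square root of the eigenvalues of (-Lap + tau^2)^(-alpha) on [0, 1]^2.
    sqrt_eig = (4 * np.pi**2 * (kx**2 + ky**2) + tau**2) ** (-alpha / 2)
    white = rng.standard_normal((n, n))
    # The filter is real and symmetric in k, so the result is real up to roundoff.
    # Multiplying by n keeps the pointwise variance roughly resolution-independent.
    return n * np.fft.ifft2(sqrt_eig * np.fft.fft2(white)).real

noise = sample_grf(64, rng=np.random.default_rng(0))
```

Unlike i.i.d. pixel noise, whose statistics change with the grid, this noise approximates the same function-valued random variable at every resolution, which is what makes it suitable for a discretization-agnostic model.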

Key Contributions

1. Infinite-dimensional Tweedie's formula: We develop a novel theoretical framework for posterior sampling in infinite-dimensional spaces by extending Tweedie's formula to the Banach space setting. This generalization enables plug-and-play guided sampling in infinite-dimensional inverse problems.

2. Multi-resolution conditional generation pipeline: We propose a function-space diffusion posterior sampling method with a multi-resolution training strategy that reduces GPU training hours by 25%. Multi-resolution inference yields a 2x speedup while maintaining accuracy.

3. State-of-the-art performance: Extensive experiments on five challenging PDEs demonstrate that FunDPS reduces average error by roughly 32% compared to state-of-the-art diffusion-based solvers, while requiring 4-10x fewer sampling steps and cutting inference wall-clock time by 25x.

Experimental Results

Main Results on Five PDE Tasks

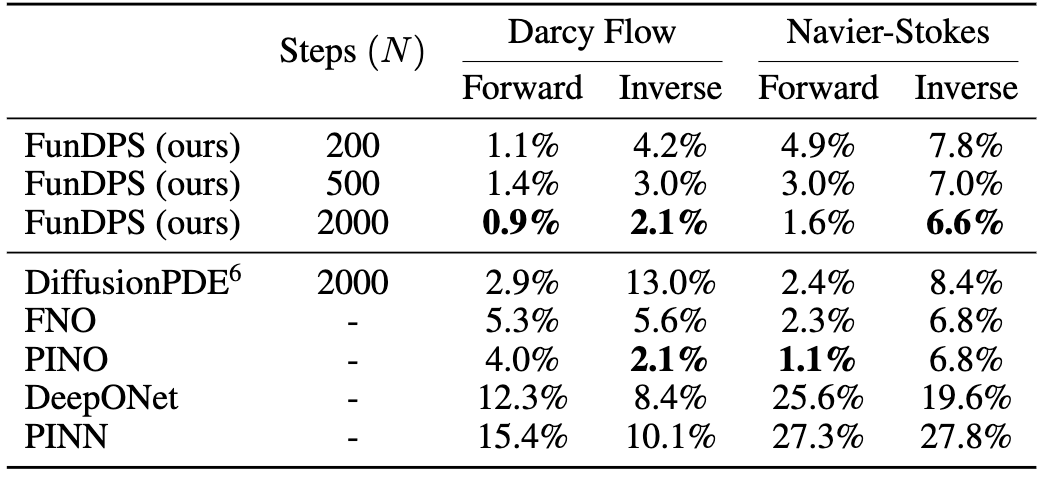

We evaluate FunDPS on both forward and inverse problems across five challenging PDE tasks: Darcy Flow, Poisson, Helmholtz, Navier-Stokes, and Navier-Stokes with Boundary Conditions. Even under severe occlusion, with only 3% of function points visible, we reconstruct both the input and solution fields effectively. Our approach consistently achieves the lowest error among all baselines.

Comparison of different models on five PDE problems (L² relative error). FunDPS reduces average error by 32% relative to DiffusionPDE while using one-fourth the number of sampling steps.
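The table reports L² relative error. A minimal sketch of the standard per-sample definition, ||prediction − target|| / ||target||, is shown below; how errors are averaged over test samples is not shown on this page, and `relative_l2_error` is an illustrative name.

```python
import numpy as np

def relative_l2_error(pred, target):
    """Relative L2 error ||pred - target||_2 / ||target||_2 over all grid points.

    On a uniform grid the quadrature weights cancel in the ratio, so the discrete
    norm ratio approximates the function-space L2 ratio.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return np.linalg.norm(pred - target) / np.linalg.norm(target)

target = np.ones((128, 128))
pred = 1.1 * target
err = relative_l2_error(pred, target)  # a uniform 10% overshoot gives 0.1
```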

Qualitative Comparisons

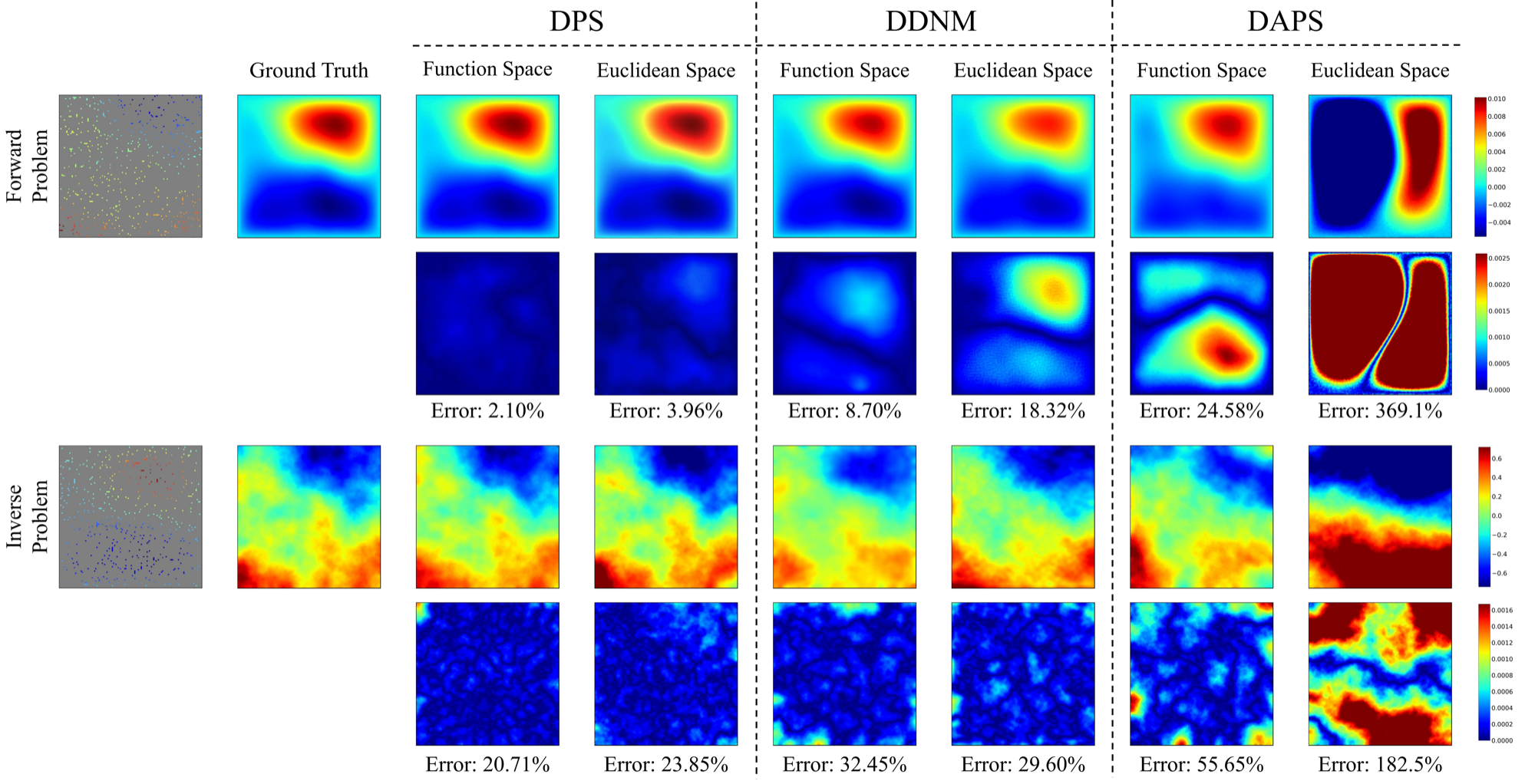

We provide qualitative comparisons showing the reconstruction quality of FunDPS versus DiffusionPDE across different PDE tasks. The visualizations demonstrate that FunDPS produces significantly more accurate reconstructions with lower error, particularly in capturing fine-grained spatial details.

Visual comparisons across five PDE tasks. From left to right in each image: Ground Truth, FunDPS (ours), and DiffusionPDE baseline.

Efficiency and Speedup

Sampling Steps Comparison

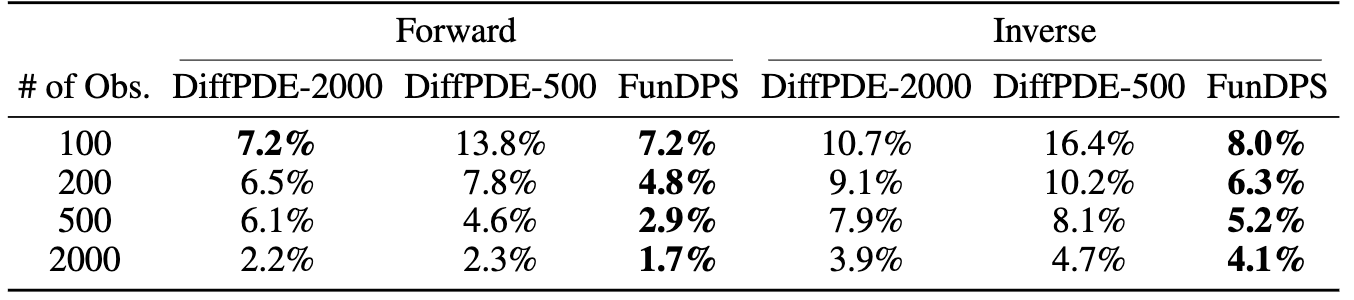

We compare the inference time and accuracy of FunDPS with DiffusionPDE. With a 200-step discretization of the reverse-time SDE, our method achieves higher accuracy than DiffusionPDE while using only one-tenth of its integration steps. As we increase the number of steps, FunDPS further reduces the error, suggesting that inference-time scaling may also hold for solving PDE problems with guided diffusion.
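What "a 200-step discretization" means concretely is a decreasing sequence of noise levels visited by the sampler. The sketch below assumes an EDM-style schedule (Karras et al., 2022) with its commonly used defaults `sigma_min=0.002`, `sigma_max=80`, `rho=7`; whether FunDPS or DiffusionPDE use exactly these values is not stated here, and `edm_sigmas` is an illustrative name.

```python
import numpy as np

def edm_sigmas(num_steps, sigma_min=0.002, sigma_max=80.0, rho=7.0):
    """Noise levels for a num_steps discretization of the reverse-time process.

    Interpolates linearly in sigma**(1/rho), which places more steps at low noise
    levels. A final sigma = 0 is appended, as in EDM samplers.
    """
    i = np.arange(num_steps)
    inv_rho = 1.0 / rho
    sigmas = (
        sigma_max**inv_rho
        + i / (num_steps - 1) * (sigma_min**inv_rho - sigma_max**inv_rho)
    ) ** rho
    return np.append(sigmas, 0.0)

fundps_like = edm_sigmas(200)     # 200 steps, the FunDPS setting in the text
baseline_like = edm_sigmas(2000)  # 2000 steps, the DiffusionPDE setting in the text
```

Both grids span the same noise range; the 200-step version simply takes larger jumps between noise levels, so each step must carry more of the work.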

(a) Comparison of FunDPS and DiffusionPDE in terms of accuracy and inference time with varying numbers of sampling steps; (b) Demonstration of the multi-resolution inference pipeline.

Multi-Resolution Inference Speedup

Because neural operators are discretization-agnostic, they can be trained on multiple resolutions and applied to data at different resolutions. We propose ReNoise, a bi-level multi-resolution inference method that performs most sampling steps at low resolution and upsamples only near the end. With 80% of steps performed at low resolution, ReNoise maintains similar accuracy while yielding a further 2x speedup, reaching 7.5s/sample, 25 times faster than DiffusionPDE.
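Two ingredients of a bi-level schedule can be sketched independently of the model: splitting the step budget by the low-resolution ratio, and an operator that moves a sample from the coarse grid to the fine grid. This is a hypothetical illustration, not ReNoise itself. Fourier zero-padding is an assumed choice of upsampler for periodic fields, the page does not describe ReNoise's exact upsampling or re-noising procedure, and `split_steps` and `fourier_upsample` are illustrative names.

```python
import numpy as np

def split_steps(num_steps, low_res_ratio):
    """Number of reverse steps run at low and at high resolution."""
    n_low = int(round(low_res_ratio * num_steps))
    return n_low, num_steps - n_low

def fourier_upsample(field, factor=2):
    """Upsample a periodic 2D field by zero-padding its centered spectrum."""
    n = field.shape[0]
    m = n * factor
    spec = np.fft.fftshift(np.fft.fft2(field))
    spec_big = np.pad(spec, (m - n) // 2)  # zero high frequencies on the fine grid
    # ifft2 normalizes by m^2 instead of n^2, so rescale to preserve point values.
    return np.fft.ifft2(np.fft.ifftshift(spec_big)).real * factor**2

# Usage: a band-limited field on a 32x32 grid is interpolated exactly to 64x64.
n = 32
x = np.arange(n) / n
X, Y = np.meshgrid(x, x, indexing="ij")
coarse = np.sin(2 * np.pi * X) + np.cos(6 * np.pi * Y)
fine = fourier_upsample(coarse)

x2 = np.arange(2 * n) / (2 * n)
X2, Y2 = np.meshgrid(x2, x2, indexing="ij")
exact_fine = np.sin(2 * np.pi * X2) + np.cos(6 * np.pi * Y2)

n_low, n_high = split_steps(500, 0.8)  # 80% of 500 steps at low resolution
```

Since a low-resolution step costs only a fraction of a high-resolution one, running most steps on the coarse grid is where the additional speedup comes from.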

Comparison of the accuracy of FunDPS with ReNoise under different ratios of low-resolution inference steps.

Actual Runtime Performance

Our model averages 15s/sample for 500 steps (without multi-resolution inference) on a single NVIDIA RTX 4090 GPU, while DiffusionPDE takes 190s/sample for 2000 steps on the same hardware at the same 128×128 discretization. The speedup comes from two factors: our more efficient implementation completes more steps per second, and we require significantly fewer steps.
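The two factors can be separated with simple arithmetic on the reported numbers. The variable names below are illustrative.

```python
# Reported wall-clock numbers (RTX 4090, 128x128 grid).
fundps_s, fundps_steps = 15.0, 500
dpde_s, dpde_steps = 190.0, 2000

per_step_fundps = fundps_s / fundps_steps        # 0.03 s/step
per_step_dpde = dpde_s / dpde_steps              # 0.095 s/step
per_step_gain = per_step_dpde / per_step_fundps  # about 3.2x faster per step
step_gain = dpde_steps / fundps_steps            # 4x fewer steps
total_gain = dpde_s / fundps_s                   # about 12.7x overall
renoise_gain = dpde_s / 7.5                      # about 25x with ReNoise
```

The overall gain is the product of the per-step gain and the step-count gain, and the further 2x from ReNoise brings it to the roughly 25x quoted above.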

Ablation Studies

We conduct comprehensive ablation studies to validate our design choices. See Appendices H.1 to H.8 of the paper for full details.

Citation

@inproceedings{yao2025fundps,
  title={Guided Diffusion Sampling on Function Spaces with Applications to PDEs},
  author={Jiachen Yao and Abbas Mammadov and Julius Berner and Gavin Kerrigan and Jong Chul Ye and Kamyar Azizzadenesheli and Anima Anandkumar},
  year={2025},
  booktitle={Advances in Neural Information Processing Systems},
}

Acknowledgments

The authors thank Christopher Beckham for his efficient implementation of diffusion operators. Our thanks also extend to Jiahe Huang for her discussion on DiffusionPDE, and Yizhou Zhang for his assistance with baselines. Anima Anandkumar is supported in part by the Bren endowed chair, ONR (MURI grant N00014-23-1-2654), and the AI2050 senior fellow program at Schmidt Sciences.